Agents

Use case

LLM-based agents are powerful general problem solvers.

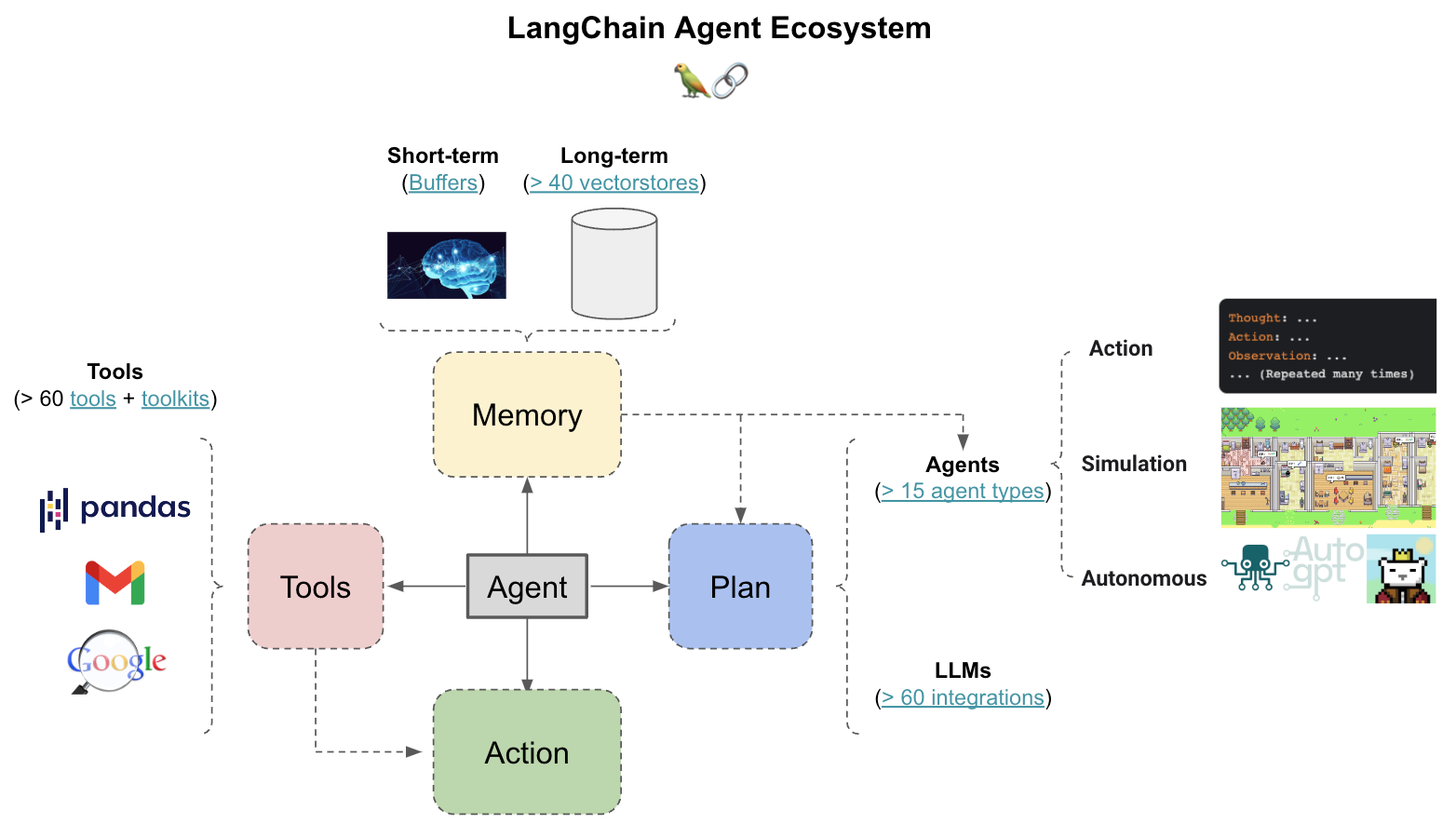

The primary LLM agent components include at least 3 things:

- Planning: The ability to break down tasks into smaller sub-goals

- Memory: The ability to retain and recall information

- Tools: The ability to get information from external sources (e.g., APIs)

Unlike LLMs simply connected to APIs, agents can:

- Self-correct

- Handle multi-hop tasks (several intermediate "hops" or steps to arrive at a conclusion)

- Tackle long time horizon tasks (that require access to long-term memory)

Overview

LangChain has many agent types.

Nearly all agents will use the following components:

Planning

- Prompt: Can give the LLM personality, context (e.g., via retrieval from memory), or strategies for learning (e.g., chain-of-thought).

- Agent: Responsible for deciding what step to take next using an LLM with the Prompt.

Memory

- This can be short or long-term, allowing the agent to persist information.

Tools

- Tools are functions that an agent can call.

However, agents fall into a few broad categories:

- Action agents: Designed to decide the sequence of actions (tool use) (e.g., OpenAI functions agents, ReAct agents).

- Simulation agents: Designed for role-play, often in a simulated environment (e.g., Generative Agents, CAMEL).

- Autonomous agents: Designed for independent execution towards long-term goals (e.g., BabyAGI, Auto-GPT).

This guide focuses on action agents.

Quickstart

pip install langchain openai google-search-results

# Set env var OPENAI_API_KEY and SERPAPI_API_KEY or load from a .env file

# import dotenv

# dotenv.load_dotenv()

Tools

LangChain has many tools for Agents that we can load easily.

Let's load search and a calculator.

# Tool

from langchain.agents import load_tools

from langchain.chat_models import ChatOpenAI

llm = ChatOpenAI(temperature=0)

tools = load_tools(["serpapi", "llm-math"], llm=llm)


Agent

The OPENAI_FUNCTIONS agent is a good action agent to start with.

OpenAI models have been fine-tuned to recognize when a function should be called.

# Prompt

from langchain.agents import AgentExecutor

from langchain.schema import SystemMessage

from langchain.agents import OpenAIFunctionsAgent

system_message = SystemMessage(content="You are a search assistant.")

prompt = OpenAIFunctionsAgent.create_prompt(system_message=system_message)

# Agent

search_agent = OpenAIFunctionsAgent(llm=llm, tools=tools, prompt=prompt)

agent_executor = AgentExecutor(agent=search_agent, tools=tools, verbose=False)

# Run

agent_executor.run("How many people live in canada as of 2023?")


'As of 2023, the estimated population of Canada is approximately 39,858,480 people.'

Great, we have created a simple search agent with a tool!

Note that we use an agent executor, which is the runtime for an agent.

This is what calls the agent and executes the actions it chooses.

Pseudocode for this runtime is below:

next_action = agent.get_action(...)
while next_action != AgentFinish:
    observation = run(next_action)
    next_action = agent.get_action(..., next_action, observation)
return next_action

While this may seem simple, there are several complexities this runtime handles for you, including:

- Handling cases where the agent selects a non-existent tool

- Handling cases where the tool errors

- Handling cases where the agent produces output that cannot be parsed into a tool invocation

- Logging and observability at all levels (agent decisions, tool calls) either to stdout or LangSmith.
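The loop and two of the failure cases above can be sketched in plain Python. This is a minimal illustration, not LangChain's `AgentExecutor`: the names `Action`, `Finish`, `run_agent`, and `scripted_policy` are hypothetical, the scripted policy stands in for the LLM, and the step limit is an added assumption.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple, Union


@dataclass
class Action:
    """A request to call one tool with one string input (hypothetical type)."""
    tool: str
    tool_input: str


@dataclass
class Finish:
    """The agent's final answer; returning this ends the loop (hypothetical type)."""
    output: str


Step = Tuple[Action, str]  # (action taken, observation returned)
Policy = Callable[[str, List[Step]], Union[Action, Finish]]


def run_agent(policy: Policy, tools: Dict[str, Callable[[str], str]],
              question: str, max_steps: int = 5) -> str:
    """Ask the policy for a decision, run the chosen tool, feed the result back."""
    steps: List[Step] = []
    for _ in range(max_steps):
        decision = policy(question, steps)
        if isinstance(decision, Finish):
            return decision.output
        tool = tools.get(decision.tool)
        if tool is None:
            # Non-existent tool: report it as an observation so the policy can recover.
            observation = f"Error: unknown tool '{decision.tool}'"
        else:
            try:
                observation = tool(decision.tool_input)
            except Exception as exc:
                # A tool error also becomes an observation instead of a crash.
                observation = f"Error: {exc}"
        steps.append((decision, observation))
    return "Stopped: step limit reached"


def scripted_policy(question: str, steps: List[Step]) -> Union[Action, Finish]:
    """Stand-in for the LLM: pick a missing tool, then a real one, then finish."""
    if not steps:
        return Action(tool="lookup", tool_input=question)
    if len(steps) == 1:
        return Action(tool="calculator", tool_input="6*7")
    return Finish(output=f"The answer is {steps[-1][1]}")


def calculator(expression: str) -> str:
    """Toy tool: multiply two integers written as 'a*b'."""
    left, right = expression.split("*")
    return str(int(left) * int(right))


answer = run_agent(scripted_policy, {"calculator": calculator}, "What is 6*7?")
print(answer)  # The answer is 42
```

Turning failures into observations, rather than raising, is one plausible design choice: it gives the agent a chance to pick a different tool on the next step.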

Memory

Short-term memory

Of course, memory is needed to enable conversation / persistence of information.

LangChain has many options for short-term memory, which are frequently used in chat.

They can be employed with agents too.

ConversationBufferMemory is a popular choice for short-term memory.

We set MEMORY_KEY, which can be referenced by the prompt later.

Now, let's add memory to our agent.

# Memory

from langchain.memory import ConversationBufferMemory

MEMORY_KEY = "chat_history"

memory = ConversationBufferMemory(memory_key=MEMORY_KEY, return_messages=True)

# Prompt w/ placeholder for memory

from langchain.schema import SystemMessage

from langchain.agents import OpenAIFunctionsAgent

from langchain.prompts import MessagesPlaceholder

system_message = SystemMessage(content="You are a search assistant tasked with using Serpapi to answer questions.")

prompt = OpenAIFunctionsAgent.create_prompt(

system_message=system_message,

extra_prompt_messages=[MessagesPlaceholder(variable_name=MEMORY_KEY)]

)

# Agent

search_agent_memory = OpenAIFunctionsAgent(llm=llm, tools=tools, prompt=prompt, memory=memory)

agent_executor_memory = AgentExecutor(agent=search_agent_memory, tools=tools, memory=memory, verbose=False)

agent_executor_memory.run("How many people live in Canada as of August, 2023?")

'As of August 2023, the estimated population of Canada is approximately 38,781,291 people.'

agent_executor_memory.run("What is the population of its largest provence as of August, 2023?")

'As of August 2023, the largest province in Canada is Ontario, with a population of over 15 million people.'

Looking at the trace, we can see what is happening:

- The chat history is passed to the LLM

- This gives context to "its" in "What is the population of its largest provence as of August, 2023?"

- The LLM generates a function call to the search tool

function_call:
  name: Search
  arguments: |-
    {
      "query": "population of largest province in Canada as of August 2023"
    }

- The search is executed

- The results from search are passed back to the LLM for synthesis into an answer
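The first step of that trace, passing the chat history to the LLM, can be sketched without any library. This is only an illustration of the idea: `BufferMemory` and `build_messages` are hypothetical names, not LangChain classes, and the stored answer is a placeholder.

```python
from typing import Dict, List


class BufferMemory:
    """Hypothetical stand-in for a conversation buffer: keeps every message."""

    def __init__(self) -> None:
        self.messages: List[Dict[str, str]] = []

    def save_turn(self, user_input: str, ai_output: str) -> None:
        """Record one completed exchange."""
        self.messages.append({"role": "user", "content": user_input})
        self.messages.append({"role": "assistant", "content": ai_output})


def build_messages(system: str, buffer: BufferMemory, user_input: str) -> List[Dict[str, str]]:
    """Place the stored history between the system message and the new input."""
    return (
        [{"role": "system", "content": system}]
        + buffer.messages
        + [{"role": "user", "content": user_input}]
    )


buffer = BufferMemory()
buffer.save_turn("How many people live in Canada?", "(answer from the first turn)")
messages = build_messages(
    "You are a search assistant.", buffer, "What is the population of its largest province?"
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Because the earlier question about Canada is in the message list, a model reading it has what it needs to resolve "its" in the follow-up.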

Long-term memory

Vectorstores are great options for long-term memory.

import faiss

from langchain.vectorstores import FAISS

from langchain.docstore import InMemoryDocstore

from langchain.embeddings import OpenAIEmbeddings

embedding_size = 1536

embeddings_model = OpenAIEmbeddings()

index = faiss.IndexFlatL2(embedding_size)

vectorstore = FAISS(embeddings_model.embed_query, index, InMemoryDocstore({}), {})
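To make the idea behind a vector store concrete, here is a small pure-Python sketch of storing texts as vectors and recalling the nearest ones by L2 distance, the same metric `IndexFlatL2` uses. Everything in it is an assumption for illustration: `embed` is a toy letter-count embedding rather than a real embedding model, and `VectorMemory` is a hypothetical class, not the FAISS wrapper.

```python
import math
from typing import List, Tuple


def embed(text: str) -> List[float]:
    """Toy embedding (assumption): normalized counts of the letters a-z."""
    counts = [0.0] * 26
    for char in text.lower():
        if "a" <= char <= "z":
            counts[ord(char) - ord("a")] += 1.0
    norm = math.sqrt(sum(c * c for c in counts)) or 1.0
    return [c / norm for c in counts]


def l2_distance(a: List[float], b: List[float]) -> float:
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


class VectorMemory:
    """Hypothetical long-term memory: store texts, recall the nearest ones."""

    def __init__(self) -> None:
        self.entries: List[Tuple[List[float], str]] = []

    def add(self, text: str) -> None:
        self.entries.append((embed(text), text))

    def search(self, query: str, k: int = 1) -> List[str]:
        """Exhaustive nearest-neighbour search by L2 distance."""
        query_vector = embed(query)
        ranked = sorted(self.entries, key=lambda entry: l2_distance(entry[0], query_vector))
        return [text for _, text in ranked[:k]]


store = VectorMemory()
store.add("apple pie recipe")
store.add("quarterly tax deadline")
# An identical text is at distance 0, so it ranks first.
print(store.search("apple pie recipe"))  # ['apple pie recipe']
```

A real embedding model places texts with similar meaning close together, so the same search would also recall paraphrases; the toy embedding here only compares letter frequencies.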


Going deeper

- Explore projects using long-term memory, such as autonomous agents.

Tools

As mentioned above, LangChain has many tools for Agents that we can load easily.

We can also define custom tools. For example, here is a search tool.

- The Tool dataclass wraps functions that accept a single string input and return a string output.

- return_direct determines whether to return the tool's output directly.

  - Setting this to True means that after the tool is called, the AgentExecutor will stop looping.

from langchain.agents import Tool, tool

from langchain.utilities import GoogleSearchAPIWrapper

search = GoogleSearchAPIWrapper()

search_tool = [
    Tool(
        name="Search",
        func=search.run,
        description="useful for when you need to answer questions about current events",
        return_direct=True,
    )
]
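The effect of `return_direct` can be sketched with a toy loop. This is an illustration under stated assumptions, not LangChain's runtime: `DirectTool`, `always_search`, `run_loop`, and `fake_search` are hypothetical names, and the policy deliberately never finishes on its own so that the flag is the only thing that stops the loop early.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass
class DirectTool:
    """Hypothetical tool record carrying a return_direct flag."""
    func: Callable[[str], str]
    return_direct: bool = False


def always_search(question: str) -> Tuple[str, str]:
    """Toy policy: always choose the Search tool with the raw question."""
    return ("Search", question)


def run_loop(tools: Dict[str, DirectTool], question: str, max_steps: int = 3) -> str:
    """Run the policy until a return_direct tool fires or the step limit is hit."""
    observation = ""
    for _ in range(max_steps):
        tool_name, tool_input = always_search(question)
        tool = tools[tool_name]
        observation = tool.func(tool_input)
        if tool.return_direct:
            # Hand the raw tool output back without consulting the policy again.
            return observation
    return f"Stopped after {max_steps} steps; last observation: {observation}"


def fake_search(query: str) -> str:
    """Placeholder search function; returns a label instead of real results."""
    return f"raw results for: {query}"


direct = run_loop({"Search": DirectTool(fake_search, return_direct=True)}, "population of Canada")
looped = run_loop({"Search": DirectTool(fake_search)}, "population of Canada")
print(direct)  # raw results for: population of Canada
print(looped)  # Stopped after 3 steps; last observation: raw results for: population of Canada
```

The trade-off is that a `return_direct` tool skips the final synthesis step, so the caller receives the tool's raw output rather than an answer phrased by the LLM.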


To make it easier to define custom tools, a @tool decorator is provided.

This decorator can be used to quickly create a Tool from a simple function.

# Tool

@tool
def get_word_length(word: str) -> int:
    """Returns the length of a word."""
    return len(word)

word_length_tool = [get_word_length]
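To show roughly what such a decorator does, here is a simplified sketch that builds a tool record from a function's name and docstring. It is not LangChain's implementation: `SimpleTool`, `simple_tool`, and `count_letters` are hypothetical names, and requiring a docstring is a choice made for this sketch.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SimpleTool:
    """Hypothetical tool record: what an agent needs to pick and call a function."""
    name: str
    description: str
    func: Callable[[str], object]

    def run(self, tool_input: str) -> str:
        # Return a string so the result can be placed back into a prompt.
        return str(self.func(tool_input))


def simple_tool(func: Callable[[str], object]) -> SimpleTool:
    """Decorator: take the tool's name and description from the function itself."""
    if not func.__doc__:
        raise ValueError("This sketch requires a docstring to describe the tool")
    return SimpleTool(name=func.__name__, description=func.__doc__.strip(), func=func)


@simple_tool
def count_letters(word: str) -> int:
    """Returns the length of a word."""
    return len(word)


print(count_letters.name)          # count_letters
print(count_letters.description)   # Returns the length of a word.
print(count_letters.run("educa"))  # 5
```

The description matters because it is the text an agent sees when deciding which tool to call.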

Going deeper

Toolkits

- Toolkits are groups of tools needed to accomplish specific objectives.

- There are more than 15 different agent toolkits (e.g., Gmail, Pandas).

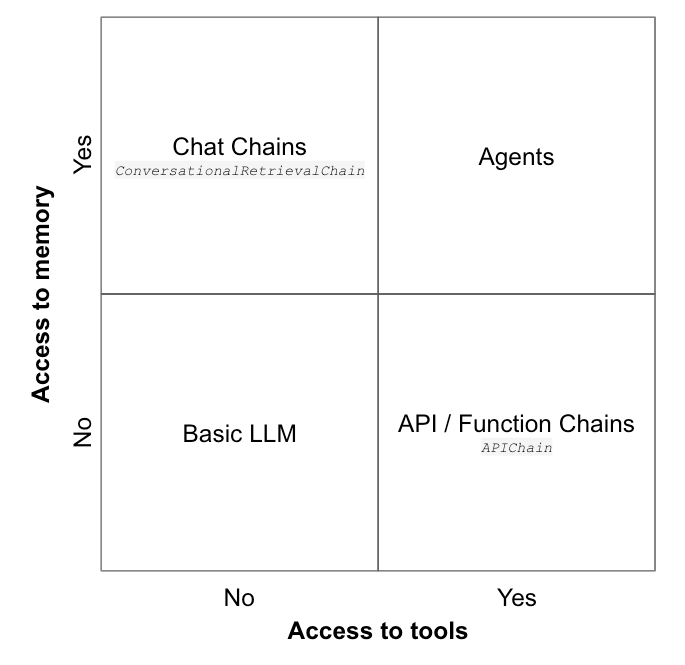

Here is a simple way to think about agents vs the various chains covered in other docs:

Agents

There are a number of action agent types available in LangChain.

- ReAct: This is the most general purpose action agent using the ReAct framework, which can work with Docstores or Multi-tool Inputs.

- OpenAI functions: Designed to work with OpenAI function-calling models.

- Conversational: Designed to be used in conversational settings.

- Self-ask with search: Designed to look up factual answers to questions.

OpenAI Functions agent

As shown in the Quickstart, let's continue with the OpenAI functions agent.

This uses OpenAI models, which are fine-tuned to detect when a function should be called.

They will respond with the inputs that should be passed to the function.
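As a rough sketch of what happens with such a response, the snippet below dispatches a function call to a Python function. It assumes the reply carries a function name plus a JSON string of arguments, as in the function_call trace shown earlier; `FUNCTIONS`, `dispatch`, and `word_length` are hypothetical names, and the reply is hand-written rather than produced by a model.

```python
import json
from typing import Callable, Dict


def word_length(word: str) -> int:
    """Example function the model may ask us to call."""
    return len(word)


# Registry mapping function names the model may emit to Python callables.
FUNCTIONS: Dict[str, Callable[..., object]] = {"word_length": word_length}


def dispatch(function_call: Dict[str, str]) -> str:
    """Look up the named function, decode its JSON arguments, and call it."""
    func = FUNCTIONS[function_call["name"]]
    arguments = json.loads(function_call["arguments"])
    return str(func(**arguments))


# Hand-written stand-in for a model reply (assumption, not real model output).
reply = {"name": "word_length", "arguments": '{"word": "educa"}'}
print(dispatch(reply))  # 5
```

The model never runs the function itself; the runtime does, and then passes the result back to the model as an observation.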

We can unpack it, starting with a custom prompt:

# Memory

MEMORY_KEY = "chat_history"

memory = ConversationBufferMemory(memory_key=MEMORY_KEY, return_messages=True)

# Prompt

from langchain.schema import SystemMessage

from langchain.agents import OpenAIFunctionsAgent

system_message = SystemMessage(content="You are very powerful assistant, but bad at calculating lengths of words.")

prompt = OpenAIFunctionsAgent.create_prompt(

system_message=system_message,

extra_prompt_messages=[MessagesPlaceholder(variable_name=MEMORY_KEY)]

)


Define agent:

# Agent

from langchain.agents import OpenAIFunctionsAgent

agent = OpenAIFunctionsAgent(llm=llm, tools=word_length_tool, prompt=prompt)


Run agent:

# Run the executor, including the short-term memory we created

agent_executor = AgentExecutor(agent=agent, tools=word_length_tool, memory=memory, verbose=False)

agent_executor.run("how many letters in the word educa?")

'There are 5 letters in the word "educa".'

ReAct agent

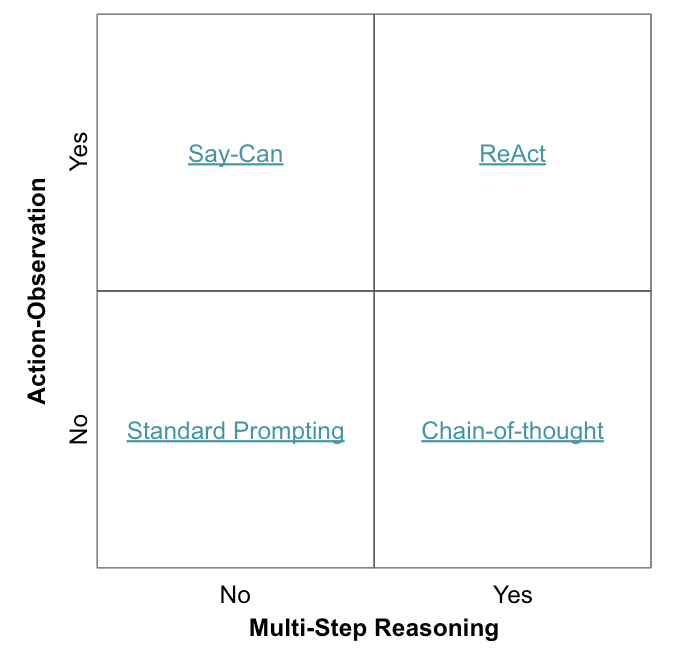

ReAct is another popular agent framework.

There has been a lot of work on LLM reasoning, such as chain-of-thought prompting.

There has also been work on LLM action-taking to generate observations, such as SayCan.

ReAct marries these two ideas:

It uses a characteristic Thought, Action, Observation pattern in the output.
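To illustrate how a runtime might read that pattern, here is a small parser sketch. It assumes the model's text uses the labels `Action:`, `Action Input:`, and `Final Answer:`; the regular expressions and the `parse_react` helper are hypothetical and simplified, and the sample strings are hand-written rather than real model output.

```python
import re
from typing import Optional, Tuple

# Assumed label format: "Action: <tool>" followed by "Action Input: <input>".
ACTION_PATTERN = re.compile(r"Action:\s*(.+?)\s*\nAction Input:\s*(.+)")
FINAL_PATTERN = re.compile(r"Final Answer:\s*(.+)", re.DOTALL)


def parse_react(text: str) -> Tuple[str, str, Optional[str]]:
    """Return ("finish", answer, None) or ("action", tool, tool_input)."""
    final = FINAL_PATTERN.search(text)
    if final:
        return ("finish", final.group(1).strip(), None)
    action = ACTION_PATTERN.search(text)
    if action:
        return ("action", action.group(1).strip(), action.group(2).strip())
    # Output that fits neither shape cannot be turned into a tool invocation.
    raise ValueError(f"Could not parse model output: {text!r}")


step = "Thought: I should look this up.\nAction: Search\nAction Input: capital of Canada"
done = "Thought: I now know the final answer.\nFinal Answer: Ottawa"

print(parse_react(step))  # ('action', 'Search', 'capital of Canada')
print(parse_react(done))  # ('finish', 'Ottawa', None)
```

After an action is parsed and executed, the tool result is appended to the text as an Observation and the model is called again, which produces the repeating Thought, Action, Observation cycle.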

We can use initialize_agent to create the ReAct agent from a list of available types here:

* AgentType.ZERO_SHOT_REACT_DESCRIPTION: ZeroShotAgent

* AgentType.REACT_DOCSTORE: ReActDocstoreAgent

* AgentType.SELF_ASK_WITH_SEARCH: SelfAskWithSearchAgent

* AgentType.CONVERSATIONAL_REACT_DESCRIPTION: ConversationalAgent

* AgentType.CHAT_ZERO_SHOT_REACT_DESCRIPTION: ChatAgent

* AgentType.CHAT_CONVERSATIONAL_REACT_DESCRIPTION: ConversationalChatAgent

* AgentType.STRUCTURED_CHAT_ZERO_SHOT_REACT_DESCRIPTION: StructuredChatAgent

* AgentType.OPENAI_FUNCTIONS: OpenAIFunctionsAgent

* AgentType.OPENAI_MULTI_FUNCTIONS: OpenAIMultiFunctionsAgent

from langchain.agents import AgentType

from langchain.agents import initialize_agent

MEMORY_KEY = "chat_history"

memory = ConversationBufferMemory(memory_key=MEMORY_KEY, return_messages=True)

react_agent = initialize_agent(search_tool, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=False, memory=memory)


react_agent("How many people live in Canada as of August, 2023?")

react_agent("What is the population of its largest provence as of August, 2023?")

LangSmith can help us run diagnostics on the ReAct agent:

The ReAct agent fails to pass the chat history to the LLM and gets the wrong answer.

The OpenAI functions agent does pass it and gets the right answer, as shown above.

The search tool result for ReAct is also worse than for the OpenAI functions agent.

Collectively, this tells us to carefully inspect agent traces and tool outputs.

As we saw with the SQL use case, ReAct agents can work very well for specific problems.

But, as shown here, the result is degraded relative to what we see with the OpenAI functions agent.

Custom

Let's peel it back even further to define our own action agent.

We can create a custom agent to unpack the central pieces:

- Tools: The tools the agent has available to use

- Agent: Decides which action to take

from typing import List, Tuple, Any, Union

from langchain.schema import AgentAction, AgentFinish

from langchain.agents import Tool, AgentExecutor, BaseSingleActionAgent

class FakeAgent(BaseSingleActionAgent):
    """Fake Custom Agent."""

    @property
    def input_keys(self):
        return ["input"]

    def plan(
        self, intermediate_steps: List[Tuple[AgentAction, str]], **kwargs: Any
    ) -> Union[AgentAction, AgentFinish]:
        """Given input, decide what to do.

        Args:
            intermediate_steps: Steps the LLM has taken to date,
                along with observations
            **kwargs: User inputs.

        Returns:
            Action specifying what tool to use.
        """
        # Always call the Search tool with the raw user input.
        return AgentAction(tool="Search", tool_input=kwargs["input"], log="")

    async def aplan(
        self, intermediate_steps: List[Tuple[AgentAction, str]], **kwargs: Any
    ) -> Union[AgentAction, AgentFinish]:
        """Given input, decide what to do.

        Args:
            intermediate_steps: Steps the LLM has taken to date,
                along with observations
            **kwargs: User inputs.

        Returns:
            Action specifying what tool to use.
        """
        # Async variant of plan with the same fixed behavior.
        return AgentAction(tool="Search", tool_input=kwargs["input"], log="")

fake_agent = FakeAgent()

fake_agent_executor = AgentExecutor.from_agent_and_tools(
    agent=fake_agent,
    tools=search_tool,
    verbose=False,
)

fake_agent_executor.run("How many people live in canada as of 2023?")

"The current population of Canada is 38,808,843 as of Tuesday, August 1, 2023, based on Worldometer elaboration of the latest United Nations data 1. Canada 2023\xa0... Mar 22, 2023 ... Record-high population growth in the year 2022. Canada's population was estimated at 39,566,248 on January 1, 2023, after a record population\xa0... Jun 19, 2023 ... As of June 16, 2023, there are now 40 million Canadians! This is a historic milestone for Canada and certainly cause for celebration. It is also\xa0... Jun 28, 2023 ... Canada's population was estimated at 39,858,480 on April 1, 2023, an increase of 292,232 people (+0.7%) from January 1, 2023. The main driver of population growth is immigration, and to a lesser extent, natural growth. Demographics of Canada · Population pyramid of Canada in 2023. May 2, 2023 ... On January 1, 2023, Canada's population was estimated to be 39,566,248, following an unprecedented increase of 1,050,110 people between January\xa0... Canada ranks 37th by population among countries of the world, comprising about 0.5% of the world's total, with over 40.0 million Canadians as of 2023. The current population of Canada in 2023 is 38,781,291, a 0.85% increase from 2022. The population of Canada in 2022 was 38,454,327, a 0.78% increase from 2021. Whether a given sub-nation is a province or a territory depends upon how its power and authority are derived. Provinces were given their power by the\xa0... Jun 28, 2023 ... Index to the latest information from the Census of Population. ... 2023. Census in Brief: Multilingualism of Canadian households\xa0..."

Runtime

The AgentExecutor class is the main agent runtime supported by LangChain.

However, there are other, more experimental runtimes for autonomous agents:

- Plan-and-execute Agent

- Baby AGI

- Auto GPT

Explore more about:

- Simulation agents: Designed for role-play, often in a simulated environment (e.g., Generative Agents, CAMEL).

- Autonomous agents: Designed for independent execution towards long-term goals (e.g., BabyAGI, Auto-GPT).